One of the main problems we encounter when speaking with manufacturers about the use of AI in manufacturing for quality control, predictive maintenance, predictive analytics or human-machine interface simplification is not a technical one: it’s trust.

- “How do we know that the algorithms give us the correct answer?”

- “Doesn’t the model just tell you what you want to hear?”

- “I have done this job for 23 years, I just know if something goes wrong, does the AI model?”

These are just a few examples of the concerns we hear about implementing AI. They all come down to one basic question: how does one trust AI? These concerns are understandable and valid, because a black box that takes in a lot of data and returns an answer is an uncomfortable concept. As humans, we want to understand how and why the algorithm reached a particular conclusion, not just blindly trust it.

So how can we address this unease and help people to take the first, often tentative steps to gain trust?

Here are two ways to build trust, and both are important.

Performance Builds Trust

Nothing is as convincing as good performance. If a model has shown time after time that it makes the right call, finds defects that were previously overlooked, correctly categorizes defect types or alerts you to equipment issues in time to prevent failure and line downtime, you will learn to trust its capabilities.

We have seen this play out with one of our customers where whenever there is any doubt or discussion around a quality issue, the default question is “What does the model say?” It wasn’t always so. Initially, many weren’t convinced that the model could match let alone improve upon the status quo.

So, every time the model called a defect, an employee would double-check by hand and find that, yes, the model had made the correct call. Over time, the model proved itself beyond a reasonable doubt and has become the final authority for all decisions.

The issue with this approach is that it takes time and a lot of effort. In the case of our customer, it took a good year to go from “I don’t trust AI!” to “What does the model say?” but now, nobody doubts the model’s performance anymore.

SHAP Values – Explaining what Drives AI-Generated Results

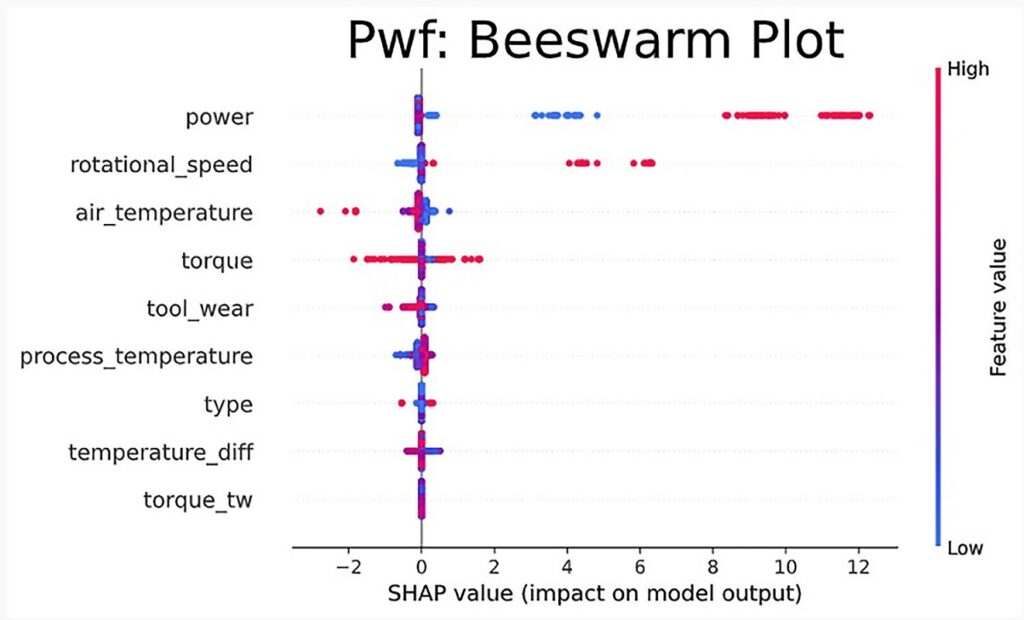

Luckily, there is another tool that can help build trust by adding some transparency to the process: AI explainability. There are different AI explainability tools, but SHapley Additive exPlanations, or SHAP for short, is the best-known and the de facto default for machine learning algorithms. While SHAP values don’t explain every step of the AI decision-making process, they show how each feature affects the final prediction, the significance of each feature compared to the others, and the model’s reliance on interactions between features. (1)

With that, SHAP values pinpoint the most important features and their influence on the prediction. Conversely, they also show you which factors aren’t important to the outcome and therefore you don’t have to spend time and money to optimize them.

AI Fun Fact

Shapley values, on which SHAP is based, were originally developed in game theory as a way to fairly distribute the payout of a game among the players. In the context of AI, the input factors are the “players” and the “payout” is the model’s prediction. SHAP values make sure that each input factor/player receives their fair share of the payout/prediction.
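To make the “fair share” idea concrete, here is a minimal sketch that computes exact Shapley values for a tiny game by averaging each player's marginal contribution over all coalitions of the other players. The payout function and its numbers are hypothetical and chosen only for illustration. Production SHAP tooling relies on faster approximations, so this is not how the SHAP library works internally.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, payout):
    """Exact Shapley values: the weighted average of each player's
    marginal contribution over all coalitions of the other players."""
    n = len(players)
    values = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for size in range(n):
            # Weight of a coalition S of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (payout(s | {p}) - payout(s))
        values[p] = total
    return values


def toy_payout(coalition):
    """Hypothetical 'prediction' when only the given features are known."""
    risk = 0.0
    if "power" in coalition:
        risk += 30
    if "air_temp" in coalition:
        risk += 10
    if "power" in coalition and "air_temp" in coalition:
        risk += 20  # interaction effect, shared between both features
    return risk


players = ["power", "air_temp", "torque"]
phi = shapley_values(players, toy_payout)
for name, value in phi.items():
    print(f"{name}: {value:.1f}")
```

In this toy game the 20-point interaction is split evenly between the two features that cause it, the feature that never changes the payout receives zero, and the three shares add up to the full payout of 60.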

The Importance of SHAP Values for Manufacturing

But how is this applicable to manufacturing? Let’s look at an example: a synthetic dataset specifically developed for this purpose at the Hochschule für Technik und Wirtschaft Berlin with some modifications. (2)

The dataset includes fourteen typical features of a production line, such as power, torque, ambient and process temperatures, and SHAP is used to establish their contributions to three different modes of machine failure.

The figure below shows a bar chart that lists the importance of each factor to the machine failure. In this specific case, power, air temperature and process temperature are by far the most critical parameters and therefore the ones that need to be considered when optimizing for the remaining useful life of the equipment. Under the given circumstances, the other factors are negligible.
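A ranking like this is commonly derived by averaging the absolute SHAP value of each feature across all samples. The sketch below shows that aggregation under stated assumptions: the per-sample SHAP values are made-up numbers for illustration, not results from the dataset, and the feature names are shortened versions of those mentioned in the text.

```python
from statistics import fmean

# Hypothetical per-sample SHAP values (one dict per sample).
# These are illustrative numbers, NOT results from the dataset.
shap_rows = [
    {"power": 0.30, "air_temp": -0.20, "process_temp": 0.10, "torque": 0.02},
    {"power": -0.50, "air_temp": 0.10, "process_temp": -0.20, "torque": -0.01},
    {"power": 0.40, "air_temp": 0.30, "process_temp": 0.15, "torque": 0.00},
]


def global_importance(rows):
    """Mean absolute SHAP value per feature, sorted from most to least important."""
    features = list(rows[0].keys())
    importance = {f: fmean([abs(r[f]) for r in rows]) for f in features}
    return sorted(importance.items(), key=lambda kv: kv[1], reverse=True)


ranking = global_importance(shap_rows)

# Text-only bar chart so the sketch needs no plotting library
for name, score in ranking:
    print(f"{name:<14}{'#' * round(score * 50)} {score:.3f}")
```

Taking the absolute value matters: a feature that pushes some predictions up and others down would otherwise average out to nearly zero and look unimportant.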

Using SHAP to Model Production

SHAP values can also be used to model different conditions. For example, if a goal is to keep power usage low for a more sustainable production (instead of increasing it to optimize remaining useful life), the contribution of the other factors will change. “Playing” with the conditions makes it possible to gain deep insights into which factors drive machine failure and helps fine-tune the input parameters to optimize remaining useful life within given constraints.
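A small what-if sketch illustrates why a factor's contribution can shift when another input changes. It assumes a hypothetical two-feature risk score with an interaction term and attributes each prediction relative to a fixed baseline operating point, using the exact two-player Shapley formula. The function, coefficients and operating points are invented for illustration and are not taken from the dataset.

```python
def risk_score(power, temp_excess):
    """Hypothetical failure-risk score: heat is more harmful at high power."""
    return 2 * power + 1 * temp_excess + 0.5 * power * temp_excess


def two_feature_shap(f, x, baseline):
    """Exact Shapley attribution for a two-feature model.
    A 'missing' feature is replaced by its baseline value (an assumption)."""
    xp, xt = x
    bp, bt = baseline
    phi_power = 0.5 * ((f(xp, bt) - f(bp, bt)) + (f(xp, xt) - f(bp, xt)))
    phi_temp = 0.5 * ((f(bp, xt) - f(bp, bt)) + (f(xp, xt) - f(xp, bt)))
    return {"power": phi_power, "temp_excess": phi_temp}


baseline = (4, 10)  # hypothetical reference operating point

# Same temperature excess in both scenarios, only the power setting differs
high_power = two_feature_shap(risk_score, (8, 20), baseline)
low_power = two_feature_shap(risk_score, (2, 20), baseline)

print(high_power)  # {'power': 38.0, 'temp_excess': 40.0}
print(low_power)   # {'power': -19.0, 'temp_excess': 25.0}
```

The temperature is identical in both scenarios, yet its contribution drops from 40 to 25 once power is lowered, because part of its effect comes from the interaction with power.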

It will be a while until everybody in the plant asks “What does the model say?”. However, starting the process of implementing selected AI solutions sooner rather than later will help people become familiar with their capabilities and learn to trust them. In addition, AI explainability can be used to make the rationale behind the model’s recommendation more transparent, and transparency builds trust.

We are here to help you deploy AI-based solutions in your plant. Contact us to discuss how we can support you.

If you still wonder whether now is the right time to embark on the AI journey, here is a blog for you.

Learn more about how to help transition your company to AI

References

(1) An Introduction to SHAP Values and Machine Learning Interpretability

(2) Stephan Matzka, School of Engineering – Technology and Life, Hochschule für Technik und Wirtschaft Berlin, 12459 Berlin, Germany