AI models learn by seeing examples. That is AI’s defining superpower: instead of painstakingly defining an object at the pixel level, you can just show the model some pictures and it will learn to identify that object. But even a superpower comes with challenges.

The challenge lies in the word “some”. “Some” can mean many hundreds, especially when you are training a model to find small defects, such as tiny imperfections on metallic surfaces. Generating hundreds of these images and annotating them can take up significant time and resources.

Pre-training your model and transfer learning can provide a shortcut. But just how far can they take you in training your model for a custom application?

Here is what we learned in the process of training a model to detect minuscule (<0.5 mm) surface defects on high-end car rims.

Step 1: Standard Pre-Training

New models know nothing. They are effectively blank slates. In a sense they are like a newborn baby who needs to learn everything from observation. And just as you don’t start a baby on different car models or word puzzles but on basic shapes, colors and characteristics (smooth, rough, soft, scratchy), you pretrain a model to recognize basic shapes, colors, surface characteristics, lighting conditions, etc. using established image libraries like ImageNet [1].

This takes you part of the way. A pretrained model has an easier time learning your more complex problems since it starts with an understanding of basic shapes, colors and other characteristics and has even learned to discern more complex features such as eyes or blossoms.

The beauty is that in many cases you don’t actually have to pretrain the models yourself because open source pretrained models are available for a number of applications.

Step 2: Training Your Model with Specific, Relevant Examples

What the model hasn’t learned is identifying the specialized features you are looking for, like that tiny inclusion on the high-end rim or a small smudge on a glass surface.

The next step is training the model with the actual examples – your products with tiny inclusions or smudges – so it learns to recognize them reliably. Given that the model has already learned the basics, fewer examples are sufficient to fully train it.

This can make a massive difference. Assume, for example, that you want to teach your model to categorize defects rather than just recognize them. It is easy to collect data about defects that occur frequently, but rare defects are a different story. It can take weeks or even months to collect enough examples of them, so reducing the number of examples needed can dramatically shorten the timeline to deployment.

Overall, this process works well: AI models become very proficient at doing what you have trained them to do.
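The principle behind Step 1 and Step 2 can be sketched in a few lines. The example below is a toy illustration without any dependencies, not our production pipeline: `frozen_features` is a hypothetical stand-in for a pretrained backbone that is never updated, and only a small logistic-regression head is trained on a handful of labeled patches. All names, features and pixel values are assumptions made for illustration.

```python
import math

def frozen_features(patch):
    """Stand-in for a pretrained backbone: fixed, never updated during training.

    `patch` is a list of grayscale pixel values in [0, 1]. The two features
    (mean brightness and contrast) are hypothetical placeholders for the
    generic features a real pretrained network would supply.
    """
    mean = sum(patch) / len(patch)
    contrast = max(patch) - min(patch)
    return [mean, contrast]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, epochs=500, lr=0.5):
    """Train only the small classification head on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for patch, label in examples:
            feats = frozen_features(patch)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - label  # gradient of the log loss w.r.t. the logit
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias

def predict(head, patch):
    """Return the estimated probability that a patch contains a defect."""
    weights, bias = head
    feats = frozen_features(patch)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)

# A handful of labeled patches: 1 = defect (a dark spot raises contrast), 0 = clean.
examples = [
    ([0.80, 0.82, 0.81, 0.79], 0),
    ([0.70, 0.71, 0.69, 0.70], 0),
    ([0.80, 0.20, 0.81, 0.79], 1),
    ([0.75, 0.74, 0.10, 0.76], 1),
]
head = train_head(examples)
```

In a real project the frozen part would be a network pretrained on a library such as ImageNet and the head would be a new output layer, but the division of labor is the same: the general features are reused and only the task-specific part is learned from your own examples.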

But just how universal is that learning? Can a model that finds a paint defect on a rim also detect surface defects on other surfaces it wasn’t trained on? If so, how well?

Transfer Learning – How Good Is AI at Generalizing Specific Lessons It Learned?

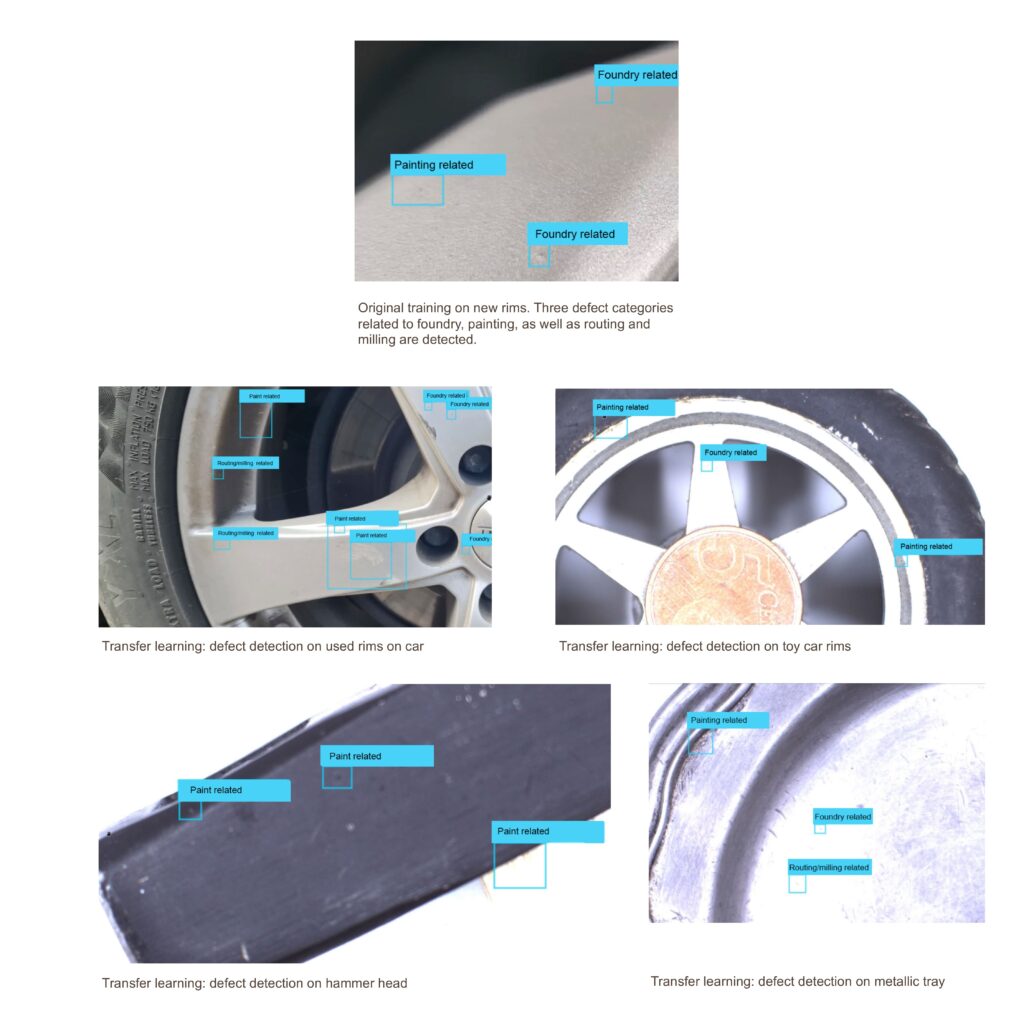

To find out, we used images of several hundred small surface defects, sorted them into three main categories (foundry-related, painting-related and routing/milling-related), annotated the pictures, then fed them into our model.

We then used a set of new images that the model had not seen before to confirm that it had learned to detect and categorize these defects well.
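Setting aside images the model never sees during training can be sketched as follows. This is a minimal example under assumptions: the file names and counts are made up, and splitting each category separately (a stratified split) is one common choice rather than a description of our exact procedure.

```python
import random
from collections import defaultdict

def stratified_split(samples, holdout_fraction=0.2, seed=0):
    """Split (image_id, category) pairs into training and held-out sets.

    Each category is split separately so that rare defect types still
    appear in the held-out set. A category with a single image would end
    up in the held-out set only.
    """
    by_category = defaultdict(list)
    for image_id, category in samples:
        by_category[category].append(image_id)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, holdout = [], []
    for category, ids in sorted(by_category.items()):
        rng.shuffle(ids)
        n_holdout = max(1, round(len(ids) * holdout_fraction))
        holdout += [(i, category) for i in ids[:n_holdout]]
        train += [(i, category) for i in ids[n_holdout:]]
    return train, holdout

# Hypothetical annotated set: image names and counts are made up.
samples = (
    [(f"foundry_{i}.png", "foundry") for i in range(50)]
    + [(f"painting_{i}.png", "painting") for i in range(30)]
    + [(f"milling_{i}.png", "routing/milling") for i in range(10)]
)
train, holdout = stratified_split(samples)
```

The held-out images are used only for checking the trained model, never for training it.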

Then, we challenged the model: we showed it images of other metallic objects with surface blemishes, such as other rims, a metal tray, several tools, the rims of a toy car and a metal gas bottle.

Was the model able to transfer the learning from the high-end rims to everyday objects? Here are some pictures of what the model returned.

In short: the model detected the surface defects it had learned on one object on all the other objects as well.

Well, mostly.

Lessons Learned for Applications in Manufacturing

Here is what we learned from this work, specifically for the application of transfer learning in manufacturing:

Transfer learning works: a model trained on a specific task can apply this learning to other, related tasks.

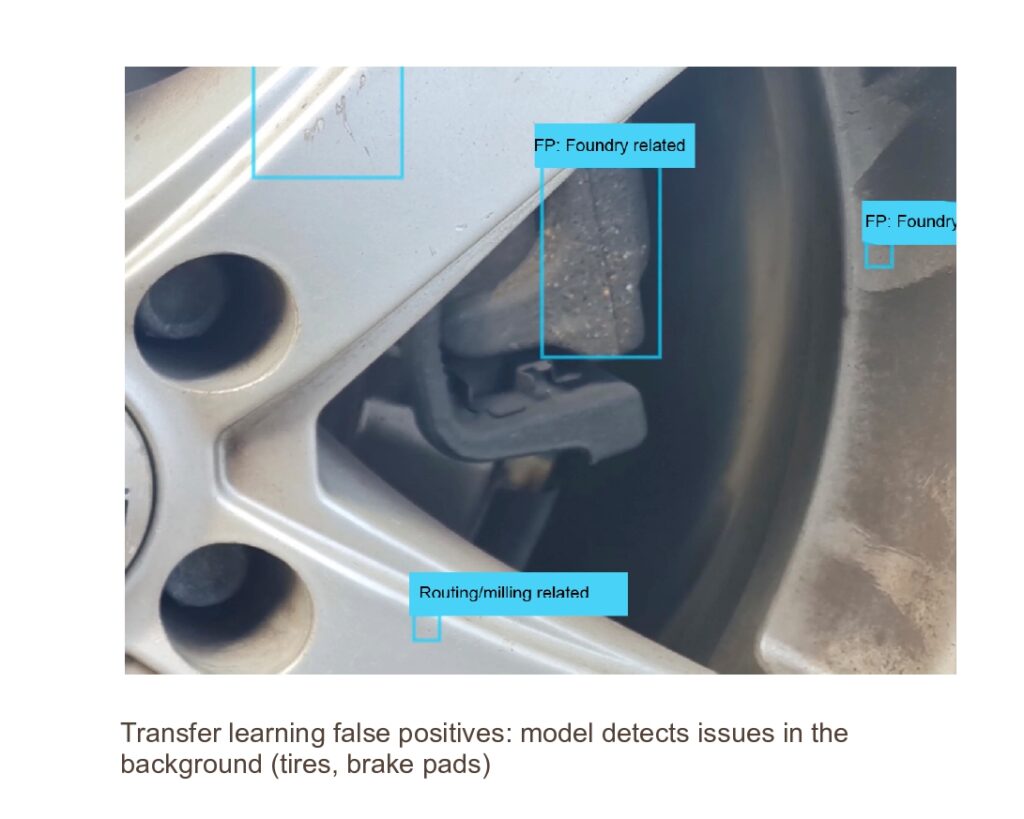

Transfer learning isn’t perfect: there are false positives and false negatives, which means that some training on the specific task, e.g. the actual surface and defect type, needs to happen before a model is truly optimized for it. Especially demanding tasks, like zero-defect requirements in manufacturing, necessitate customized models and an AI platform that can manage training, re-training and the model life cycle in general.
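False positives and false negatives are easiest to reason about once they are counted per defect category. The sketch below shows one way to do that; the label names and the result list are hypothetical and do not reproduce our measurements.

```python
from collections import Counter

def per_class_errors(pairs):
    """Count true positives, false positives and false negatives per category.

    `pairs` is a list of (actual, predicted) labels, one per inspected region.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for actual, predicted in pairs:
        if actual == predicted:
            tp[actual] += 1
        else:
            fn[actual] += 1     # the actual category was missed
            fp[predicted] += 1  # the predicted category was a false alarm
    return tp, fp, fn

def precision_recall(tp, fp, fn, label):
    """Precision: share of alarms that were real. Recall: share of defects found."""
    found = tp[label] + fp[label]
    present = tp[label] + fn[label]
    precision = tp[label] / found if found else 0.0
    recall = tp[label] / present if present else 0.0
    return precision, recall

# Hypothetical results on an unfamiliar object (made-up numbers).
results = (
    [("painting", "painting")] * 3
    + [("painting", "no_defect")] * 1   # missed defect
    + [("no_defect", "painting")] * 2   # false alarm
    + [("no_defect", "no_defect")] * 4
)
tp, fp, fn = per_class_errors(results)
precision, recall = precision_recall(tp, fp, fn, "painting")
```

For a zero-defect requirement, recall is typically the number to watch, since every missed defect is a part that should not have shipped.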

An obvious first step is to teach the model where to look for defects and where not to look. In our example, the model found plenty of problems with the tires that showed in some of the pictures (it was trained on defects on rims coming off the production line, not rims surrounded by tires as in real life). Defining the background that the model should not consider will reduce the number of false positives significantly.
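One simple way to approximate this is to discard detections that fall outside a region of interest after the model has run. The sketch below assumes the rim can be described as a circle in the image and that detections come as bounding boxes; the coordinates, the dictionary layout and the function names are hypothetical. Masking the input images or annotating the background during training are alternatives.

```python
import math

def inside_rim(detection, center, radius):
    """True if the center of a detection box lies inside the circular rim region."""
    x, y, w, h = detection["box"]  # top-left corner, width, height in pixels
    cx, cy = x + w / 2, y + h / 2
    return math.hypot(cx - center[0], cy - center[1]) <= radius

def filter_detections(detections, center, radius):
    """Keep only detections on the rim; drop those on the tire or background."""
    return [d for d in detections if inside_rim(d, center, radius)]

# Hypothetical model output for one image (made-up coordinates).
detections = [
    {"label": "painting", "box": (300, 310, 12, 10)},  # on the rim
    {"label": "painting", "box": (40, 500, 20, 15)},   # on the tire
]
kept = filter_detections(detections, center=(320, 320), radius=200)
```

Here only the first detection survives, because the second one lies outside the rim circle.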

Transfer learning is a great shortcut to a custom model, but it does not remove the need to train models on the actual object and task. Completely pre-trained AI solutions that work out-of-the-box do not exist.

We have developed an AI tool that supports this kind of custom training. Contact us to discuss how our AI Bot can support you in developing powerful AI models for your specific applications.

Learn more about AI for QC by reading our article “The Role of AI Models in Visual Inspection”.

References

[1] ImageNet: A Pioneering Vision for Computers. History of Data Science, Aug. 27, 2021.